Today, The Guardian published a story falsely claiming that WhatsApp’s end-to-end encryption contains a “backdoor.”

Background

WhatsApp’s encryption uses Signal Protocol, as detailed in their technical whitepaper. In systems that deploy Signal Protocol, each client is cryptographically identified by a key pair composed of a public key and a private key. The public key is advertised publicly, through the server, while the private key remains private on the user’s device.

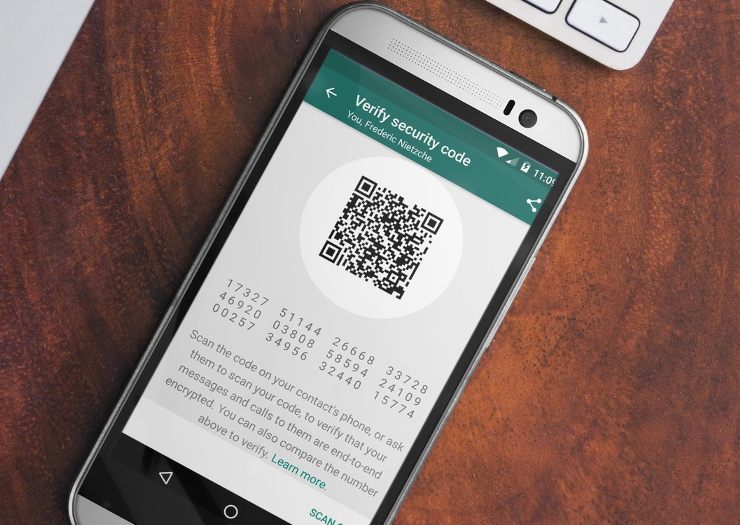

This identity key pair is bound into the encrypted channel that’s established between two parties when they exchange messages, and is exposed through the “safety number” (aka “security code” in WhatsApp) that participants can check to verify the privacy of their communication.

Most end-to-end encrypted communication systems have something that resembles this type of verification, because otherwise an attacker who compromised the server could lie about a user’s public key, and instead advertise a key for which the attacker knows the corresponding private key. This is called a “man in the middle” attack, or MITM, and is endemic to public key cryptography, not just WhatsApp.
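To make the verification idea concrete, here is a minimal sketch of how a shared code can be derived from two identity public keys. It is not the derivation that Signal Protocol or WhatsApp actually uses; the `fingerprint` function, the SHA-256 hash, and the digit grouping are all assumptions chosen for illustration. The point is only that both parties compute the same code from the same pair of keys, so a substituted key shows up as a mismatch.

```python
import hashlib
import os


def fingerprint(own_public_key: bytes, their_public_key: bytes) -> str:
    """Derive a short numeric code from two identity public keys.

    Illustrative only: NOT the real safety number algorithm.
    Assumes both keys have the same fixed length.
    """
    # Sort so that both parties hash the keys in the same order.
    first, second = sorted([own_public_key, their_public_key])
    digest = hashlib.sha256(first + second).digest()
    # Render six 4-byte chunks as groups of five decimal digits.
    groups = []
    for i in range(0, 24, 4):
        value = int.from_bytes(digest[i:i + 4], "big") % 100000
        groups.append(f"{value:05d}")
    return " ".join(groups)


# Random bytes stand in for real public keys (hypothetical values).
alice_key = os.urandom(32)
bob_key = os.urandom(32)
attacker_key = os.urandom(32)

# Honest server: both sides see the true keys and compute the same code.
honest_match = fingerprint(alice_key, bob_key) == fingerprint(bob_key, alice_key)

# Dishonest server: Alice is handed the attacker's key in place of Bob's,
# so the code on her screen no longer matches the one on Bob's.
mitm_match = fingerprint(alice_key, attacker_key) == fingerprint(bob_key, alice_key)
```

If the two users compare codes out of band, the honest case matches and the substituted-key case does not, which is what makes the attack detectable.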

The issue

One fact of life in real-world cryptography is that these keys will change under normal circumstances. Every time someone gets a new device, or even just reinstalls the app, their identity key pair will change. This is something any public key cryptography system has to deal with. WhatsApp gives users the option to be notified when those changes occur.

While it is likely that not every WhatsApp user verifies safety numbers or safety number changes, the WhatsApp clients have been carefully designed so that the WhatsApp server has no knowledge of whether users have enabled the change notifications, or whether users have verified safety numbers. WhatsApp could try to “man in the middle” a conversation, just like with any encrypted communication system, but they would risk getting caught by users who verify keys.

Under normal circumstances, when communicating with a contact who has recently changed devices or reinstalled WhatsApp, it might be possible to send a message before the sending client discovers that the receiving client has new keys. The recipient’s device responds immediately and asks the sender to re-encrypt the message for the recipient’s new identity key. The sender displays the “safety number has changed” notification, re-encrypts the message, and delivers it.

The WhatsApp clients have been carefully designed so that they will not re-encrypt messages that have already been delivered. Once the sending client displays a “double check mark,” it can no longer be asked to re-send that message. This prevents anyone who compromises the server from being able to selectively target previously delivered messages for re-encryption.
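The sender-side behavior described above can be sketched roughly as follows. This is a simplified model under stated assumptions, not WhatsApp's implementation: the `Status`, `OutgoingMessage`, and `Sender` names are hypothetical, and actual encryption and network delivery are left out.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Status(Enum):
    SENT = auto()       # handed to the server, not yet acknowledged
    DELIVERED = auto()  # recipient acknowledged ("double check mark")


@dataclass
class OutgoingMessage:
    plaintext: str
    recipient_key: bytes  # identity key the message was encrypted for
    status: Status = Status.SENT


@dataclass
class Sender:
    notify_on_key_change: bool = True  # user setting, known only to the client
    notices: list = field(default_factory=list)

    def handle_retry_request(self, msg: OutgoingMessage, new_key: bytes) -> bool:
        """Return True if the message is re-encrypted and re-sent."""
        if msg.status is Status.DELIVERED:
            # Already delivered: never re-encrypt, no matter who asks.
            return False
        if new_key != msg.recipient_key:
            if self.notify_on_key_change:
                # Advisory notice; sending continues without blocking.
                self.notices.append("safety number has changed")
            msg.recipient_key = new_key
        # A real client would re-encrypt msg.plaintext for new_key and send it.
        return True
```

The delivered check comes first on purpose: in this sketch a retry request for a delivered message is refused before any key handling happens, which mirrors the guarantee that previously delivered messages cannot be targeted for re-encryption.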

The fact that WhatsApp handles key changes is not a “backdoor”; it is how cryptography works. Any attempt to intercept messages in transit by the server is detectable by the sender, just like with Signal, PGP, or any other end-to-end encrypted communication system.

The only question it might be reasonable to ask is whether these safety number change notifications should be “blocking” or “non-blocking.” In other words, when a contact’s key changes, should WhatsApp require the user to manually verify the new key before continuing, or should WhatsApp display an advisory notification and continue without blocking the user?
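The difference between the two options can be reduced to a single decision, sketched here with hypothetical names (`KeyChangePolicy`, `may_send`) that do not come from any real client:

```python
from enum import Enum


class KeyChangePolicy(Enum):
    BLOCKING = "blocking"
    NON_BLOCKING = "non-blocking"


def may_send(policy: KeyChangePolicy, key_changed: bool, user_verified: bool) -> bool:
    """Decide whether a message can go out right now."""
    if not key_changed:
        return True
    if policy is KeyChangePolicy.BLOCKING:
        # Hold the message until the user has verified the new key.
        return user_verified
    # Non-blocking: the client shows an advisory notice and sends anyway.
    return True
```

Under the blocking policy a key change stalls the conversation until the user acts; under the non-blocking policy the message goes out and the user can verify afterwards.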

Given the size and scope of WhatsApp’s user base, we feel that their choice to display a non-blocking notification is appropriate. It provides transparent and cryptographically guaranteed confidence in the privacy of a user’s communication, along with a simple user experience. The choice to make these notifications “blocking” would in some ways make things worse. That would leak information to the server about who has enabled safety number change notifications and who hasn’t, effectively telling the server who it could MITM transparently and who it couldn’t, which is something that WhatsApp considered very carefully.

Even if others disagree about the details of the UX, under no circumstances is it reasonable to call this a “backdoor,” as key changes are immediately detected by the sender and can be verified.

The reporting

The way this story has been reported has been disappointing. There are many quotes in the article, but it seems that The Guardian put very little effort into verifying the original technical claims they’ve made. Even though we are the creators of the encryption protocol supposedly “backdoored” by WhatsApp, we were not asked for comment.

Instead, most of the quotes in the story are from policy and advocacy organizations who seem to have been asked “WhatsApp put a backdoor in their encryption, do you think that’s bad?”

We believe that it is important to honestly and accurately evaluate the choices that organizations like WhatsApp or Facebook make. There are many things to criticize Facebook for; running a product that deployed end-to-end encryption by default for over a billion people is not one of them.

It is great that The Guardian thinks privacy is something their readers should be concerned about. However, running a story like this without taking the time to carefully evaluate claims of a “backdoor” will ultimately only hurt their readers. It has the potential to drive them away from a well-engineered and carefully considered system to much more dangerous products that make truly false claims. Since the story has been published, we have repeatedly reached out to the author and the editors at The Guardian, but have received no response.

We believe that WhatsApp remains a great choice for users concerned with the privacy of their message content.